Tuesday, February 26, 2013

Bing - Microsoft New Decision Engine

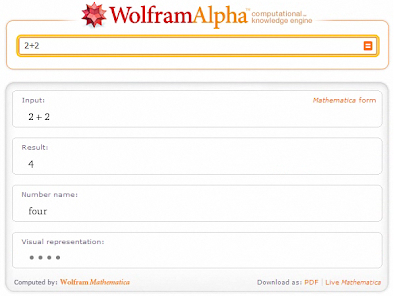

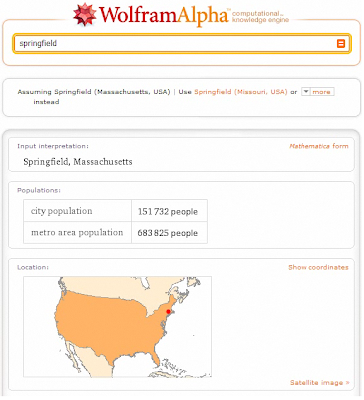

Wolfram Alpha is completely different from Google

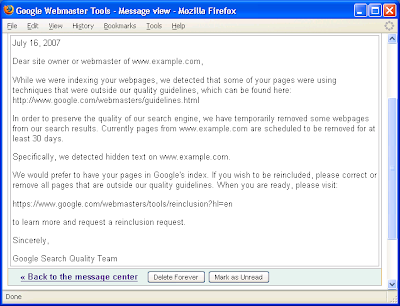

Tips to request reconsideration in Google

Friday, February 22, 2013

Reasons Behind Bad SEO Services

- SEO Professional

- SEM Professional

- SMO Professional

- Web Designer

- Programmer

- Quality Professional

- Leader

- Investor

Google Plans for faster search using Ajax

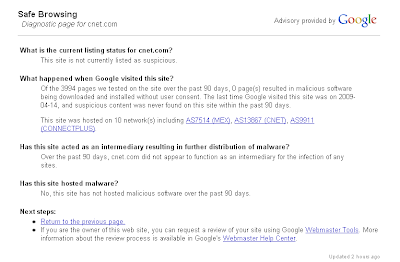

Has your website been hacked?

- Website is ranked for spammy words

- Pages load slowly or return errors even though the host is healthy

- Suspicious messages from browsers

- Web pages disappeared from the Search engine results

- Homepage is De-indexed from Google

- Present status of your site

- Did Google have any problems when visiting your site?

- Is your site distributing malware?

- Is your site hosting malware?

- Hidden text and links

- Cloaking URLs

- Automated requests to search engines

- Doorway pages

- Duplicate content

- Paid links

- Dofollow links for ads

Effective way of Doing Social Bookmarking

- Complete your entire profile so that it looks professional

- Search for authorized profiles, then vote and comment on their links

- Add authorized profiles to your friends

- Build a professional relationship with them

- Be the first to vote and comment on their links

- In return, you will get good comments and votes for your links

- Provide a variety of good links that catch the public's interest

- Digg.com

- StumbleUpon.com

- Technorati.com

- Del.icio.us

- Propeller.com

- simpy.com

- Slashdot.org, etc.

Monday, February 4, 2013

Are shortened URLs SEO friendly? Yes

Google Webmaster Tools - SEO Updates 2012

I would like to highlight and summarize the SEO updates of 2012 that were published on the official Google Webmaster Central blog.

- Google HTTPS search is now faster on modern browsers - Mar 19th 2012

- SEO Friendly Pagination algorithm update from Google - Mar 13th 2012

- Better Crawl Errors reporting in Google Webmaster tools - Mar 12th 2012

- Filtering Free hosting spam websites from search results - Mar 06th 2012

- User & Administrator access are now available in GWT - Mar 05th 2012

- Video markup language for better indexation of Videos - Feb 21st 2012

- Optimize your site to handle unexpected traffic growth - Feb 09th 2012

- Webmaster tools sitemaps interface with fresh look - Jan 26th 2012

- Few changes on Top search queries data in Webmaster tools - Jan 25th 2012

- Google Algorithm update to Page layout structure - Jan 19th 2012

- Page Titles Update in Search Results - Jan 12th 2012

1. Faster HTTPS Search:

Google made some changes to its HTTPS search. If you are logged in to your Gmail account and search for something on Google, clicking a result now takes you to that web page a little faster than with the earlier HTTPS search. The Google team has reduced the latency of HTTPS search in modern browsers such as Chrome.

2. SEO Friendly Pagination:

Most e-commerce and content portals have pagination issues. Pagination creates many near-duplicate pages, with repeated product titles on e-commerce portals and repeated article titles on content portals. Google has updated its algorithm, and there are now two ways to fix the pagination issue:

- Add a "View all" page and point rel="canonical" at it from the paginated component pages

- Use rel="prev" and rel="next"
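
Both options can be sketched in a few lines of Python. This is only an illustration: the URL scheme (`?page=N`, with page 1 at the base URL) and the function names are assumptions, not something Google prescribes.

```python
from html import escape


def pagination_links(base_url: str, page: int, last_page: int) -> list[str]:
    """Return the rel="prev"/rel="next" <link> tags for one page of a series."""

    def url(n: int) -> str:
        # Assumption: page 1 lives at base_url, later pages at ?page=N.
        return base_url if n == 1 else f"{base_url}?page={n}"

    tags = []
    if page > 1:
        tags.append(f'<link rel="prev" href="{escape(url(page - 1))}">')
    if page < last_page:
        tags.append(f'<link rel="next" href="{escape(url(page + 1))}">')
    return tags


def canonical_to_view_all(view_all_url: str) -> str:
    """Return the rel="canonical" tag a component page would use to point at a "View all" page."""
    return f'<link rel="canonical" href="{escape(view_all_url)}">'


if __name__ == "__main__":
    for tag in pagination_links("https://example.com/shoes", 2, 3):
        print(tag)
    print(canonical_to_view_all("https://example.com/shoes/all"))
```

The first page of a series gets only a "next" tag and the last page only a "prev" tag; the tags go in the `<head>` of each page.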

3. Upgraded Crawl Errors Reporting:

Crawl Errors is a feature in Webmaster Tools that reports the errors Googlebot encountered on your website. The Google Webmaster team now detects new types of errors and reports all errors more clearly. Crawl errors are categorized into two types: "Site Errors" and "URL Errors".

4. Filtering Free hosting spam websites:

Some hosting providers offer free hosting services. Spammers take advantage of free hosting to create low-quality websites automatically. Google has started filtering these websites from search results and is asking free hosting providers to report patterns of spam to the Google team, so that the team can update its algorithm and filter these spam sites more effectively.

5. Webmaster Tools now has both admin and user access:

Earlier, there was no option to give another user limited access to a Webmaster Tools account. Only full admin access could be shared with other users, which isn't safe. With the latest update, we can now grant restricted user access to others.

6. Schema.org Video Markup:

Better page titles in search results

Page titles are an important part of our search results: they’re the first line of each result and they’re the actual links our searchers click to reach websites. Our advice to webmasters has always been to write unique, descriptive page titles (and meta descriptions for the snippets) to describe to searchers what the page is about.

We use many signals to decide which title to show to users, primarily the

Page layout algorithm improvement

In our ongoing effort to help you find more high-quality websites in search results, today we’re launching an algorithmic change that looks at the layout of a webpage and the amount of content you see on the page once you click on a result.

As we’ve mentioned previously, we’ve heard complaints from users that if they click on a result and it’s difficult to find the actual content, they aren’t happy with the experience. Rather than scrolling down the page past a slew of ads, users want to see content right away. So sites that don’t have much content “above-the-fold” can be affected by this change. If you click on a website and the part of the website you see first either doesn’t have a lot of visible content above-the-fold or dedicates a large fraction of the site’s initial screen real estate to ads, that’s not a very good user experience. Such sites may not rank as highly going forward.

We understand that placing ads above-the-fold is quite common for many websites; these ads often perform well and help publishers monetize online content. This algorithmic change does not affect sites who place ads above-the-fold to a normal degree, but affects sites that go much further to load the top of the page with ads to an excessive degree or that make it hard to find the actual original content on the page. This new algorithmic improvement tends to impact sites where there is only a small amount of visible content above-the-fold or relevant content is persistently pushed down by large blocks of ads.

This algorithmic change noticeably affects less than 1% of searches globally. That means that in less than one in 100 searches, a typical user might notice a reordering of results on the search page. If you believe that your website has been affected by the page layout algorithm change, consider how your web pages use the area above-the-fold and whether the content on the page is obscured or otherwise hard for users to discern quickly. You can use our Browser Size tool, among many others, to see how your website would look under different screen resolutions.

If you decide to update your page layout, the page layout algorithm will automatically reflect the changes as we re-crawl and process enough pages from your site to assess the changes. How long that takes will depend on several factors, including the number of pages on your site and how efficiently Googlebot can crawl the content. On a typical website, it can take several weeks for Googlebot to crawl and process enough pages to reflect layout changes on the site.

Overall, our advice for publishers continues to be to focus on delivering the best possible user experience on your websites and not to focus on specific algorithm tweaks. This change is just one of the over 500 improvements we expect to roll out to search this year. As always, please post your feedback and questions in our Webmaster Help forum.

Posted by Matt Cutts, Distinguished Engineer

Update to Top Search Queries data

Webmaster level: All

Starting today, we’re updating our Top Search Queries feature to make it better match expectations about search engine rankings. Previously we reported the average position of all URLs from your site for a given query. As of today, we’ll instead average only the top position that a URL from your site appeared in.

An example

Let’s say Nick searched for [bacon] and URLs from your site appeared in positions 3, 6, and 12. Jane also searched for [bacon] and URLs from your site appeared in positions 5 and 9. Previously, we would have averaged all these positions together and shown an Average Position of 7. Going forward, we’ll only average the highest position your site appeared in for each search (3 for Nick’s search and 5 for Jane’s search), for an Average Position of 4.
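
The arithmetic in this example can be reproduced with a short sketch. The data structure and function names below are illustrative assumptions; only the positions come from the example above.

```python
from statistics import mean

# Positions at which URLs from the site appeared, per search (from the example).
searches = {
    "Nick": [3, 6, 12],
    "Jane": [5, 9],
}


def average_all_positions(searches: dict[str, list[int]]) -> float:
    """Old method: average every position from every search."""
    return mean(p for positions in searches.values() for p in positions)


def average_top_positions(searches: dict[str, list[int]]) -> float:
    """New method: average only the best (lowest-numbered) position per search."""
    return mean(min(positions) for positions in searches.values())


if __name__ == "__main__":
    print(average_all_positions(searches))  # (3 + 6 + 12 + 5 + 9) / 5 = 7
    print(average_top_positions(searches))  # (3 + 5) / 2 = 4
```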

We anticipate that this new method of calculation will more accurately match your expectations about how a link's position in Google Search results should be reported.

How will this affect my Top Search Queries data?

This change will affect your Top Search Queries data going forward. Historical data will not change.

Note that the change in calculation means that the Average Position metric will usually stay the same or decrease, as we will no longer be averaging in lower-ranking URLs.

Check out the updated Top Search Queries data in the Your site on the web section of Webmaster Tools. And remember, you can also download Top Search Queries data programmatically!

We look forward to providing you a more representative picture of your Google Search data. Let us know what you think in our Webmaster Forum.

Posted by Chris Anderson, Google Analytics team, and Susan Moskwa, Webmaster Trends Analyst

What’s new with Sitemaps

Webmaster level: All

Sitemaps are a way to tell Google about pages on your site. Webmaster Tools’ Sitemaps feature gives you feedback on your submitted Sitemaps, such as how many Sitemap URLs have been indexed, or whether your Sitemaps have any errors. Recently, we’ve added even more information! Let’s check it out:

The Sitemaps page displays details based on content-type. Now statistics from Web, Videos, Images and News are featured prominently. This lets you see how many items of each type were submitted (if any), and for some content types, we also show how many items have been indexed. With these enhancements, the new Sitemaps page replaces the Video Sitemaps Labs feature, which will be retired.

Another improvement is the ability to test a Sitemap. Unlike an actual submission, testing does not submit your Sitemap to Google as it only checks it for errors. Testing requires a live fetch by Googlebot and usually takes a few seconds to complete. Note that the initial testing is not exhaustive and may not detect all issues; for example, errors that can only be identified once the URLs are downloaded will not be caught by the test.

In addition to on-the-spot testing, we’ve got a new way of displaying errors which better exposes what types of issues a Sitemap contains. Instead of repeating the same kind of error many times for one Sitemap, errors and warnings are now grouped, and a few examples are given. Likewise, for Sitemap index files, we’ve aggregated errors and warnings from the child Sitemaps that the Sitemap index encloses. No longer will you need to click through each child Sitemap one by one.

Finally, we’ve changed the way the “Delete” button works. Now, it removes the Sitemap from Webmaster Tools, both from your account and the accounts of the other owners of the site. Be aware that a Sitemap may still be read or processed by Google even if you delete it from Webmaster Tools. For example, if you reference a Sitemap in your robots.txt file, search engines may still attempt to process the Sitemap. To truly prevent a Sitemap from being processed, remove the file from your server or block it via robots.txt.
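
To check that a robots.txt rule really blocks a retired Sitemap, Python's standard `urllib.robotparser` can evaluate the rules locally. This is a minimal sketch: the file name `/old-sitemap.xml`, the example domain, and the helper name are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt content disallows fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


# Hypothetical robots.txt that blocks a retired Sitemap file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /old-sitemap.xml
"""

if __name__ == "__main__":
    print(is_blocked(ROBOTS_TXT, "https://www.example.com/old-sitemap.xml"))
    print(is_blocked(ROBOTS_TXT, "https://www.example.com/sitemap.xml"))
```

The standard-library parser only approximates how a particular crawler interprets robots.txt, so treat the result as a quick sanity check.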

For more information on Sitemaps in Webmaster Tools and how Sitemaps work, visit our Help Center. If you have any questions, go to Webmaster Help Forum.

Written by Kamila Primke, Software Engineer, Webmaster Tools

Preparing your site for a traffic spike

It’s a moment any site owner both looks forward to, and dreads: a huge surge in traffic to your site (yay!) can often cause your site to crash (boo!). Maybe you’ll create a piece of viral content, or get Slashdotted, or maybe Larry Page will get a tattoo and your site on tech tattoos will be suddenly in vogue.

Many people go online immediately after a noteworthy event—a political debate, the death of a celebrity, or a natural disaster—to get news and information about that event. This can cause a rapid increase in traffic to websites that provide relevant information, and may even cause sites to crash at the moment they’re becoming most popular. While it’s not always possible to anticipate such events, you can prepare your site in a variety of ways so that you’ll be ready to handle a sudden surge in traffic if one should occur:

- Prepare a lightweight version of your site.

Consider maintaining a lightweight version of your website; you can then switch all of your traffic over to this lightweight version if you start to experience a spike in traffic. One good way to do this is to have a mobile version of your site, and to make the mobile site available to desktop/PC users during periods of high traffic. Another low-effort option is to just maintain a lightweight version of your homepage, since the homepage is often the most-requested page of a site as visitors start there and then navigate out to the specific area of the site that they’re interested in. If a particular article or picture on your site has gone viral, you could similarly create a lightweight version of just that page.

A couple of tips for creating lightweight pages:

- Exclude decorative elements like images or Flash wherever possible; use text instead of images in the site navigation and chrome, and put most of the content in HTML.

- Use static HTML pages rather than dynamic ones; the latter place more load on your servers. You can also cache the static output of dynamic pages to reduce server load.

- Take advantage of stable third-party services.

Another alternative is to host a copy of your site on a third-party service that you know will be able to withstand a heavy stream of traffic. For example, you could create a copy of your site, or a pared-down version with a focus on information relevant to the spike, on a platform like Google Sites or Blogger; use services like Google Docs to host documents or forms; or use a content delivery network (CDN).

- Use lightweight file formats.

If you offer downloadable information, try to make the downloaded files as small as possible by using lightweight file formats. For example, offering the same data as a plain text file rather than a PDF can allow users to download the exact same content at a fraction of the filesize (thereby lightening the load on your servers). Also keep in mind that, if it’s not possible to use plain text files, PDFs generated from textual content are more lightweight than PDFs with images in them. Text-based PDFs are also easier for Google to understand and index fully.

- Make tabular data available in CSV and XML formats.

If you offer numerical or tabular data (data displayed in tables), we recommend also providing it in CSV and/or XML format. These filetypes are relatively lightweight and make it easy for external developers to use your data in external applications or services in cases where you want the data to reach as many people as possible, such as in the wake of a natural disaster.
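
As a rough illustration of the last tip, the same table can be exported to both formats with Python's standard library. The sample data, tag names, and function names are made up for this sketch, and it assumes the column names are valid XML tag names.

```python
import csv
import io
import xml.etree.ElementTree as ET


def to_csv(rows: list[dict]) -> str:
    """Serialize a list of same-shaped dicts as CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def to_xml(rows: list[dict], root_tag: str = "rows", row_tag: str = "row") -> str:
    """Serialize the same rows as XML, one child element per column."""
    root = ET.Element(root_tag)
    for row in rows:
        item = ET.SubElement(root, row_tag)
        for key, value in row.items():
            ET.SubElement(item, key).text = str(value)
    return ET.tostring(root, encoding="unicode")


# Hypothetical table of emergency shelters.
shelters = [
    {"name": "North Hall", "capacity": 120},
    {"name": "River School", "capacity": 80},
]

if __name__ == "__main__":
    print(to_csv(shelters))
    print(to_xml(shelters))
```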

Posted by Susan Moskwa, Webmaster Trends Analyst

Using schema.org markup for videos

Webmaster level: All

Videos are one of the most common types of results on Google and we want to make sure that your videos get indexed. Today, we're also launching video support for schema.org. Schema.org is a joint effort between Google, Microsoft, Yahoo! and Yandex and is now the recommended way to describe videos on the web. The markup is very simple and can be easily added to most websites.

Adding schema.org video markup is just like adding any other schema.org data. Simply define an itemscope, an itemtype="http://schema.org/VideoObject", and make sure to set the name, description, and thumbnailUrl properties. You’ll also need either the embedURL (the location of the video player) or the contentURL (the location of the video file). A typical video player with markup might look like this:

Video: Title

content="http://www.example.com/videoplayer.swf?video=123" />

Video description
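
A minimal sketch of generating this kind of microdata from Python follows. The property names (name, description, thumbnailUrl, embedURL) are the ones listed above; the surrounding HTML layout, the function name, and the thumbnail file name are assumptions made for illustration.

```python
from html import escape


def video_object_markup(name: str, description: str,
                        thumbnail_url: str, embed_url: str) -> str:
    """Build an HTML fragment carrying schema.org VideoObject microdata."""
    return "\n".join([
        '<div itemscope itemtype="http://schema.org/VideoObject">',
        f'  <h2>Video: <span itemprop="name">{escape(name)}</span></h2>',
        f'  <meta itemprop="thumbnailUrl" content="{escape(thumbnail_url)}" />',
        f'  <meta itemprop="embedURL" content="{escape(embed_url)}" />',
        f'  <span itemprop="description">{escape(description)}</span>',
        '</div>',
    ])


if __name__ == "__main__":
    print(video_object_markup(
        name="Title",
        description="Video description",
        thumbnail_url="thumbnail.jpg",  # hypothetical file name
        embed_url="http://www.example.com/videoplayer.swf?video=123",
    ))
```

The actual player embed would sit inside the same `<div>` so that the properties describe the video the visitor sees.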

Using schema.org markup will not affect any Video Sitemaps or mRSS feeds you're already using. In fact, we still recommend that you also use a Video Sitemap because it alerts us of any new or updated videos faster and provides advanced functionality such as country and platform restrictions.

Since this means that there are now a number of ways to tell Google about your videos, choosing the right format can seem difficult. In order to make the video indexing process as easy as possible, we’ve put together a series of videos and articles about video indexing in our new Webmasters EDU microsite.

For more information, you can go through the Webmasters EDU video articles, read the full schema.org VideoObject specification, or ask questions in the Webmaster Help Forum. We look forward to seeing more of your video content in Google Search.

Posted by Henry Zhang, Product Manager

Safely share access to your site in Webmaster Tools

We just launched a new feature that allows you as a verified site owner to grant limited access to your site's data and settings in Webmaster Tools. You've had the ability to grant full verified access to others for a couple of years. Since then we've heard lots of requests from site owners for the ability to grant limited permission for others to view a site's data in Webmaster Tools without being able to modify all the settings. Now you can do exactly that with our new User administration feature.

On the Home page when you click the "Manage site" drop-down menu you'll see the menu option that was previously titled "Add or remove owners" is now "Add or remove users."

Selecting the "Add or remove users" menu item will take you to the new User administration page where you can add or delete up to 100 users and specify each user's access as "Full" or "Restricted." Users added via the User administration page are tied to a specific site. If you become unverified for that site any users that you've added will lose their access to that site in Webmaster Tools. Adding or removing verified site owners is still done on the owner verification page which is linked from the User administration page.

Granting a user "Full" permission means that they will be able to view all data and take most actions, such as changing site settings or demoting sitelinks. When a user’s permission is set to "Restricted" they will only have access to view most data, and can take some actions such as using Fetch as Googlebot and configuring message forwarding for their account. Restricted users will see a “Restricted Access” indicator at various locations within Webmaster Tools.

To see which features and actions are accessible for Restricted users, Full users and site owners, visit our Permissions Help Center article.

We hope the addition of Full and Restricted users makes management of your site in Webmaster Tools easier since you can now grant access within a more limited scope to help prevent undesirable or unauthorized changes. If you have questions or feedback about the new User administration feature please let us know in our Help Forum.

Written by Jonathan Simon, Webmaster Trends Analyst

Keeping your free hosting service valuable for searchers

Webmaster level: Advanced

Free web hosting services can be great! Many of these services have helped to lower costs and technical barriers for webmasters and they continue to enable beginner webmasters to start their adventure on the web. Unfortunately, sometimes these lower barriers (meant to encourage less techy audiences) can attract some dodgy characters like spammers who look for cheap and easy ways to set up dozens or hundreds of sites that add little or no value to the web. When it comes to automatically generated sites, our stance remains the same: if the sites do not add sufficient value, we generally consider them as spam and take appropriate steps to protect our users from exposure to such sites in our natural search results.

If a free hosting service begins to show patterns of spam, we make a strong effort to be granular and tackle only spammy pages or sites. However, in some cases, when the spammers have pretty much taken over the free web hosting service or a large fraction of the service, we may be forced to take more decisive steps to protect our users and remove the entire free web hosting service from our search results. To prevent this from happening, we would like to help owners of free web hosting services by sharing what we think may help you save valuable resources like bandwidth and processing power, and also protect your hosting service from these spammers:

- Publish a clear abuse policy and communicate it to your users, for example during the sign-up process. This step will contribute to transparency on what you consider to be spammy activity.

- In your sign-up form, consider using CAPTCHAs or similar verification tools to only allow human submissions and prevent automated scripts from generating a bunch of sites on your hosting service. While these methods may not be 100% foolproof, they can help to keep a lot of the bad actors out.

- Try to monitor your free hosting service for other spam signals like redirections, large numbers of ad blocks, certain spammy keywords, large sections of escaped JavaScript code, etc. Using the site: operator query or Google Alerts may come in handy if you’re looking for a simple, cost efficient solution.

- Keep a record of signups and try to identify typical spam patterns like form completion time, number of requests sent from the same IP address range, user-agents used during signup, user names or other form-submitted values chosen during signup, etc. Again, these may not always be conclusive.

- Keep an eye on your webserver log files for sudden traffic spikes, especially when a newly-created site is receiving this traffic, and try to identify why you are spending more bandwidth and processing power.

- Try to monitor your free web hosting service for phishing and malware-infected pages. For example, you can use the Google Safe Browsing API to regularly test URLs from your service, or sign up to receive alerts for your AS.

- Come up with a few sanity checks. For example, if you’re running a local Polish free web hosting service, what are the odds of thousands of new and legitimate sites in Japanese being created overnight on your service? There’s a number of tools you may find useful for language detection of newly created sites, for example language detection libraries or the Google Translate API v2.
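
The signup-record tip in the list above could be prototyped along these lines. This is a sketch under stated assumptions: the `Signup` record, the /24 grouping of IPv4 addresses, and both thresholds are hypothetical and would need tuning against real signup logs. As the post says, such signals are not always conclusive.

```python
from collections import Counter
from dataclasses import dataclass
from ipaddress import ip_network


@dataclass
class Signup:
    username: str
    ip: str              # IPv4 address of the client (assumption)
    form_seconds: float  # time between loading and submitting the form


def ip_range(ip: str):
    """Map an IPv4 address to its /24 network, used as the 'same range' key."""
    return ip_network(f"{ip}/24", strict=False)


def suspicious_signups(signups: list[Signup],
                       max_per_range: int = 3,
                       min_form_seconds: float = 2.0) -> list[Signup]:
    """Flag signups from crowded IP ranges or with implausibly fast form completion."""
    per_range = Counter(ip_range(s.ip) for s in signups)
    return [
        s for s in signups
        if per_range[ip_range(s.ip)] > max_per_range
        or s.form_seconds < min_form_seconds
    ]


if __name__ == "__main__":
    log = [
        Signup("alice", "198.51.100.7", 31.0),
        Signup("bot1", "203.0.113.5", 12.0),
        Signup("bot2", "203.0.113.6", 11.0),
        Signup("bot3", "203.0.113.7", 10.0),
        Signup("bot4", "203.0.113.8", 13.0),
        Signup("speedy", "192.0.2.1", 0.5),
    ]
    print([s.username for s in suspicious_signups(log)])
```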

Last but not least, if you run a free web hosting service be sure to monitor your services for sudden activity spikes that may indicate a spam attack in progress.

For more tips on running a quality hosting service, have a look at our previous post. Lastly, be sure to sign up and verify your site in Google Webmaster Tools so we may be able to notify you when needed or if we see issues.

Written by Fili Wiese (Ad Traffic Quality Team) & Kaspar Szymanski (Search Quality Team)

Crawl Errors: The Next Generation

Webmaster level: All

Crawl errors is one of the most popular features in Webmaster Tools, and today we’re rolling out some very significant enhancements that will make it even more useful.

We now detect and report many new types of errors. To help make sense of the new data, we’ve split the errors into two parts: site errors and URL errors.

Site Errors

Site errors are errors that aren’t specific to a particular URL; they affect your entire site. These include DNS resolution failures, connectivity issues with your web server, and problems fetching your robots.txt file. We used to report these errors by URL, but that didn’t make a lot of sense because they aren’t specific to individual URLs. In fact, they prevent Googlebot from even requesting a URL! Instead, we now keep track of the failure rates for each type of site-wide error. We’ll also try to send you alerts when these errors become frequent enough that they warrant attention.

Furthermore, if you don’t have (and haven’t recently had) any problems in these areas, as is the case for many sites, we won’t bother you with this section. Instead, we’ll just show you some friendly check marks to let you know everything is hunky-dory.

URL errors

URL errors are errors that are specific to a particular page. This means that when Googlebot tried to crawl the URL, it was able to resolve your DNS, connect to your server, fetch and read your robots.txt file, and then request this URL, but something went wrong after that. We break the URL errors down into various categories based on what caused the error. If your site serves up Google News or mobile (CHTML/XHTML) data, we’ll show separate categories for those errors.

Less is more

We used to show you at most 100,000 errors of each type. Trying to consume all this information was like drinking from a firehose, and you had no way of knowing which of those errors were important (your homepage is down) or less important (someone’s personal site made a typo in a link to your site). There was no realistic way to view all 100,000 errors, and no way to sort, search, or mark your progress. In the new version of this feature, we’ve focused on trying to give you only the most important errors up front. For each category, we’ll give you what we think are the 1000 most important and actionable errors. You can sort and filter these top 1000 errors, let us know when you think you’ve fixed them, and view details about them.

Some sites have more than 1000 errors of a given type, so you’ll still be able to see the total number of errors you have of each type, as well as a graph showing historical data going back 90 days. For those who worry that 1000 error details plus a total aggregate count will not be enough, we’re considering adding programmatic access (an API) to allow you to download every last error you have, so please give us feedback if you need more.

We've also removed the list of pages blocked by robots.txt, because while these can sometimes be useful for diagnosing a problem with your robots.txt file, they are frequently pages you intentionally blocked. We really wanted to focus on errors, so look for information about roboted URLs to show up soon in the "Crawler access" feature under "Site configuration".

Dive into the details

Clicking on an individual error URL from the main list brings up a detail pane with additional information, including when we last tried to crawl the URL, when we first noticed a problem, and a brief explanation of the error.

From the details pane you can click on the link for the URL that caused the error to see for yourself what happens when you try to visit it. You can also mark the error as “fixed” (more on that later!), view help content for the error type, list Sitemaps that contain the URL, see other pages that link to this URL, and even have Googlebot fetch the URL right now, either for more information or to double-check that your fix worked.

Take action!

One thing we’re really excited about in this new version of the Crawl errors feature is that you can really focus on fixing what’s most important first. We’ve ranked the errors so that those at the top of the priority list will be ones where there’s something you can do, whether that’s fixing broken links on your own site, fixing bugs in your server software, updating your Sitemaps to prune dead URLs, or adding a 301 redirect to get users to the “real” page. We determine this based on a multitude of factors, including whether or not you included the URL in a Sitemap, how many places it’s linked from (and if any of those are also on your site), and whether the URL has gotten any traffic recently from search.

Once you think you’ve fixed the issue (you can test your fix by fetching the URL as Googlebot), you can let us know by marking the error as “fixed” if you are a user with full access permissions. This will remove the error from your list. In the future, the errors you’ve marked as fixed won’t be included in the top errors list, unless we’ve encountered the same error when trying to re-crawl a URL.
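
If you export your own error list and want to triage it in a similar spirit, the factors named above (Sitemap inclusion, link counts, recent search traffic) can be combined into a simple score. The weights, caps, and field names below are purely hypothetical; Google does not publish its ranking formula, so treat this as an illustrative sketch and not a description of how Webmaster Tools ranks errors.

```python
from dataclasses import dataclass


@dataclass
class CrawlError:
    url: str
    in_sitemap: bool           # URL is listed in one of your Sitemaps
    inbound_links: int         # pages anywhere that link to the URL
    internal_links: int        # the subset of those links on your own site
    recent_search_clicks: int  # recent visits from search results


def priority(error: CrawlError) -> float:
    """Score an error; higher means more worth fixing first (hypothetical weights)."""
    score = 5.0 if error.in_sitemap else 0.0
    score += min(error.inbound_links, 10)
    score += 2 * min(error.internal_links, 10)  # links you can fix yourself count double
    score += min(error.recent_search_clicks, 20)
    return score


def top_errors(errors: list[CrawlError], limit: int = 1000) -> list[CrawlError]:
    """Return the highest-priority errors, most important first."""
    return sorted(errors, key=priority, reverse=True)[:limit]


if __name__ == "__main__":
    errors = [
        CrawlError("/typo-from-other-site", False, 1, 0, 0),
        CrawlError("/products/widget", True, 6, 5, 10),
    ]
    for e in top_errors(errors):
        print(priority(e), e.url)
```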

We’ve put a lot of work into the new Crawl errors feature, so we hope that it will be very useful to you. Let us know what you think and if you have any suggestions, please visit our forum!

Written by Kurt Dresner, Webmaster Tools team