Tuesday, February 26, 2013

Bing - Microsoft's New Decision Engine

Microsoft is going to release a new search engine called Bing. The Microsoft team says that "people will find a new search experience through Bing, so they are calling it a decision engine. People will get a world-class search experience with the latest features, and it will surely give fine results. In the future, people will make decisions through Bing alone."

Microsoft Bing Profiles on the web :

Some people say that Microsoft is serving old wine in a new bottle and that Bing will be the same as Live Search.

Others, however, are eagerly awaiting the new search experience with Bing because they are bored with Google. Google is everywhere, and everyone is looking for a change. Let's wait and see what happens.

Wolfram Alpha is completely different from Google

There has been a lot of conversation on the web recently about Wolfram Alpha. WolframAlpha is a new search engine developed by Stephen Wolfram (creator of Mathematica), with a public launch date of May 18, 2009.

Stephen Wolfram planned to release it on May 15, 2009. It did go live on that date as planned; however, because of overload, the public launch was moved to May 18, 2009.

Do you know Wolfram Alpha's tagline? It is “computational search engine”.

It's a completely different concept of search, and it is not a competitor to Google or any other search engine. Until now I had assumed that it would take Google's place in the future, but I have just watched a screencast from the Wolfram Alpha blog.

The video clearly explains how it displays results for different queries, and it is really interesting. If you would like to watch the video, just click below. It may take a few minutes to load.

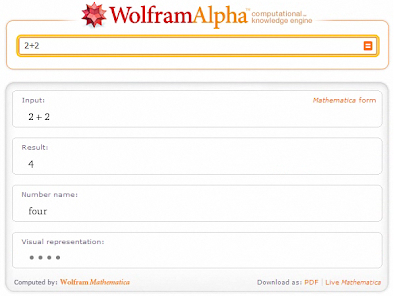

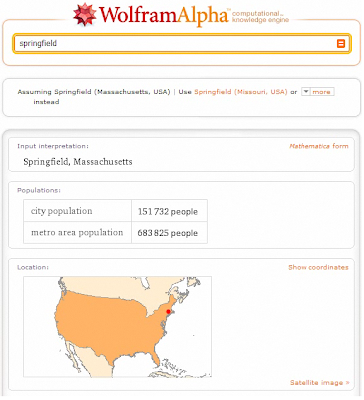

Here are a few screenshots of different Wolfram Alpha searches, to give you a better idea of this computational search engine.

Search for "2+2" in WolframAlpha (click the image below for a larger view of the result):

Search for "integrate x^2sin^3 x dx" in WolframAlpha (click the image below for a larger view of the result):

Search for "gdp france" in WolframAlpha (click the image below for a larger view of the result):

Search for "internet users in europe" in WolframAlpha (click the image below for a larger view of the result):

Search for "springfield" in WolframAlpha (click the image below for a larger view of the result):

I hope you now have a better understanding of how this search engine computes and displays its results. Everything is explained well in the video above, so I hope you have watched it and understood Stephen Wolfram's concept. Feel free to write your thoughts in the comments.

Tips to request reconsideration in Google

Many webmasters face the problem of having their sites penalized by Google. Before submitting a reconsideration request, have a look at the following video, which is no more than 4 minutes long.

In this video, Rachel Searles and Brian White, who work on the Google Search Quality team, clearly explain the process.

The discussion in the video runs as follows:

Brian Says :

Hi, I am Brian White and this is my colleague Rachel. We are on the Search Quality team at Google, in the webspam group. We are here today with some tips on the reconsideration process.

Rachel Says:

All right, let's start with the case where you know your site has violated the Google guidelines. It is important to admit any mistakes you've made and let us know what you've done to try to fix them. Sometimes we get requests from people who say, "My site adheres to the guidelines now," and that is not really enough information for us. So please be as detailed as possible.

Realize that there are people actually reading these requests, including the people in this room. If you do not know what your site might have done to get a penalty, go back to the Google guidelines and reread them carefully before requesting reconsideration.

Look at the things to avoid, and ask questions of the people who work on your site if you do not work on it yourself. If you'd like the advice of a third party, you can also seek help in our Google webmaster forum.

Brian Says:

Sometimes we get reconsideration requests where the requester associates technical website issues with a penalty. An example could be that the server timed out for a while, or that bad content was delivered for a time. Google is pretty adaptive to these kinds of transient issues with websites. So people sometimes misread the situation as "I have a penalty" and then seek reconsideration, when it is probably a good idea to wait a bit and see if things revert to their previous state. Some people attribute a penalty to duplicate content, and usually the problem lies elsewhere. Or say you are in a partnership with someone else, say another website, and they put the combined effort together in a way that goes against our quality guidelines and involves both your website and theirs; then the end result reflects badly on both.

You have control over your site, but sometimes it's hard to get stuff cleaned up on sites you do not control. We are sympathetic to these situations. Just make your best effort to document that in a complete reconsideration request. In the case of, say, bad links that were gathered, point us to a URL that shows your exhaustive effort to clean that up. Also, we have pretty good tools internally, so do not try to fool us, as there are actual people, as Rachel said, looking at your reports. If you intentionally pass along bad or misleading information, we will disregard that request for reconsideration.

Rachel Says:

And please do not spam the reconsideration form. It does not help to submit multiple requests all at the same time; just send one detailed, concise report and get it right the first time. Your request will be reviewed by a member of our team, and we do review them promptly. That said, if you have some new information to add about your site, go ahead and file a new reconsideration request. And finally, if reconsideration does happen, please be aware that it can take some time to notice when a penalty has been lifted.

Brian Says :

Yes, the bottom line is we care very deeply for our search engine users, and we want them to be happy and not have to complain. So make sure that:

A) The issues with your site are fixed before filing for reconsideration.

B) We don't have to worry about your site violating the quality guidelines in the future.

So, from the people who are part of the reconsideration process, from our end, thank you.

Rachel Says: Thank you

Friday, February 22, 2013

Reasons Behind Bad SEO Services

SEO scams are increasing day by day. I keep reading stories in forums from people saying they were cheated by one SEO service or another, and a few clients have personally shared with me how they were cheated by such services.

I did a little research into why this is happening, and I have come to a conclusion about why it keeps repeating. People without a long-term vision make this kind of mistake. They think their knowledge is enough to establish and run a company, and they never consider long-term results. They simply feel that they can do it.

Professionals with a little experience at a good SEO company leave and set up their own organization. Then they look for clients. Winning clients and satisfying them is no ordinary matter, so they promise everything the client asks for when taking on the project, without worrying about results at that moment. Privately they only intend to try, yet they promise the client that they will deliver. In the end they often fail and earn a bad reputation in the market.

Some people promise clients that they will get sales and leads through SEO. SEO helps in getting leads and conversions, but it does not deliver them directly, so that promise is wrong. SEO professionals can make promises about search-engine-friendly optimization, PageRank, and SERPs. Search engine marketing professionals are the ones who should make promises about leads and conversions.

For a successful online business, an organization should have:

- SEO Professional

- SEM Professional

- SMO Professional

- Web Designer

- Programmer

- Quality Professional

- Leader

- Investor

To run a successful business, we need at least the professionals listed above, and each of them should have real expertise in their respective field; only then will the organization move towards success. My request to those who are establishing new companies: never start a company with half knowledge. It's not good for you or for your clients.

Google Plans for faster search using Ajax

Google is planning to display search results using Ajax; currently, results are delivered as normal HTML. In Feb 2009 I read a few posts stating that Google was testing a new interface built with Ajax.

Using Ajax to display results is good because it makes search smoother and faster. However, experts say that it creates major problems for analytics tracking, especially referrer string tracking.

In the present process, when someone searches for "seo updates" in Google, for example, we get the following URL:

If Google used Ajax to display search results, the URL would look like this:

Have you noticed the difference between the first and second URLs? In the second URL we have # in place of search?. The browser does not send the part of a URL that comes after #, so analytics cannot get the part of the referrer string that follows it. As a result, for all Google visits, analytics will show only the google.com home page instead of the complete referrer URL, and you will never find out which keyword brought the visitor to your site. This is the major problem with Ajax, and it was clearly stated on the Clicky blog.
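The fragment behaviour described here can be sketched in a few lines of Python. This is only an illustration: both URLs are hypothetical stand-ins rather than the exact URLs Google serves, and the helper names are made up for this sketch. It uses only the standard library's `urllib.parse`.

```python
from urllib.parse import parse_qs, urldefrag, urlsplit

# Hypothetical example URLs (assumptions for illustration only).
classic_url = "http://www.google.com/search?q=seo+updates"
ajax_url = "http://www.google.com/#q=seo+updates"


def referrer_sent_by_browser(url):
    """Drop the fragment: the part after '#' is not sent as the referrer."""
    return urldefrag(url).url


def keyword_from_referrer(referrer):
    """Return the 'q' query parameter of a referrer, or None if it is absent."""
    values = parse_qs(urlsplit(referrer).query).get("q")
    return values[0] if values else None


for url in (classic_url, ajax_url):
    referrer = referrer_sent_by_browser(url)
    print(referrer, "->", keyword_from_referrer(referrer))
```

With the classic URL the keyword survives in the referrer; with the fragment-based URL only the home page address is left, so there is no keyword for an analytics tool to read.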

Google says that, to overcome this problem, they will use gateway URLs for better tracking and faster search results. It seems the Google team has been working on this for the past few months, and I hope they have sorted the problem out, though of course they haven't revealed it yet. Yesterday (Apr 14, 2009) the Google Analytics blog announced a change in Google search referrals that will not affect analytics tracking. I hope this is related to the Ajax feature; anyway, we can't come to a conclusion until Google announces it officially.

According to that blog post, old Google.com search referrals look like this:

http://www.google.com/search?hl=en&q=seo updates&btnG=Google+Search

After the change, we will see something like this:

http://www.google.com/url?sa=t&source=web&ct=res&cd=7&url=http%3A%2F%2Fwww.example.com%2Fmypage.htm&ei=0SjdSa-1N5O8M_qW8dQN&rct=j&q=flowers&usg=AFQjCNHJXSUh7Vw7oubPaO3tZOzz-F-u_w&sig2=X8uCFh6IoPtnwmvGMULQfw
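As a rough sketch, a referral in the new format can still be unpacked with Python's standard `urllib.parse`. The URL is the example quoted above; the function name is made up for this sketch, and the meaning of `cd` is not stated in this post, so it is returned as a raw value without interpretation.

```python
from urllib.parse import parse_qs, urlsplit

# The new-style referral quoted in the post, split across lines for readability.
GATEWAY_REFERRER = (
    "http://www.google.com/url?sa=t&source=web&ct=res&cd=7"
    "&url=http%3A%2F%2Fwww.example.com%2Fmypage.htm&ei=0SjdSa-1N5O8M_qW8dQN"
    "&rct=j&q=flowers&usg=AFQjCNHJXSUh7Vw7oubPaO3tZOzz-F-u_w"
    "&sig2=X8uCFh6IoPtnwmvGMULQfw"
)


def parse_gateway_referrer(referrer):
    """Pull the search keyword, landing page and raw 'cd' value from a referrer."""
    params = parse_qs(urlsplit(referrer).query)

    def first(name):
        values = params.get(name)
        return values[0] if values else None

    return {
        "keyword": first("q"),         # what the visitor searched for
        "landing_page": first("url"),  # percent-decoded destination URL
        "cd": first("cd"),             # meaning not documented here (assumption-free raw value)
    }


info = parse_gateway_referrer(GATEWAY_REFERRER)
print(info)
```

The keyword is still present in the `q` parameter, which fits the claim that analytics tracking is unaffected by the change.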

A few months back, Matt Cutts said that they are testing a new Ajax interface that uses JavaScript to enhance search results. The Google team is testing it on a small share of searches (less than 1%). They too don't know yet whether it breaks analytics; it is still in the testing stage. A few users are getting the test results, and based on these the team will work out which areas need fixing.

Have a look at the following video to see what Matt Cutts says about 'Search using Ajax':

Looking forward to faster and smoother Google search results using Ajax ..... :)

Is your website hacked?

Many webmasters suffer from hacking and de-indexing problems. We need to take great care to protect a website, whether it is small or big.

Finding out why your site was de-indexed or how it was hacked is a little difficult and needs a lot of attention. Participate in the Google webmaster groups, so that Google engineers might guide you in the right direction.

When you suspect that your site has been hacked or de-indexed from Google, check for the following signs to learn its status.

- Website ranks for spammy words

- Pages take longer to load and return errors even though the host is good

- Suspicious messages from browsers

- Web pages have disappeared from the search engine results

- Homepage is de-indexed from Google

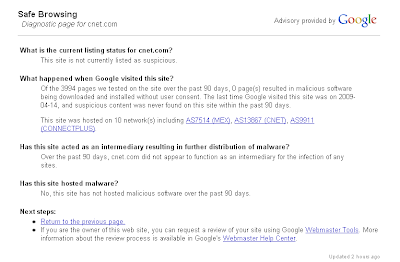

How to confirm that your site has suspicious content:

Google released the Safe Browsing Diagnostic Tool in Mar 2008. Using this tool you can check:

- Present status of your site

- Whether Google had any problems while visiting it

- Whether your site is distributing malware

- Whether your site has hosted malware

In the following URL, just replace cnet.com with your site's URL to get the results:

Check your site and let me know if you have any problems or concerns. The report looks as follows. If your site is safe, that's good; otherwise, the next steps you need to take are described below the image.

If your site is hacked, then:

The first step is to contact your hosting service and ask them to remove the malware from the site. Then check for the following:

- Hidden text and links

- Cloaked URLs

- Automated requests to search engines

- Doorway pages

- Duplicate content

- Paid links

- Dofollow links for ads

You must avoid all of the above techniques to get good rankings in search engines. Once you have fixed all these issues, go to Google Webmaster Tools and request re-inclusion. After a few days your site may return to its old position, and sometimes to even better positions.

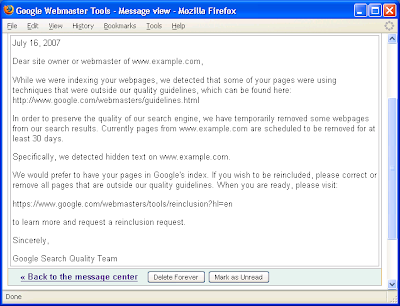

I hope everyone is using Google Webmaster Tools; if not, please start. It gives you all the information about how Google crawls your site: how many pages are included and indexed, crawling issues, other problems, etc. By following the instructions in the account, you can fix the issues and keep your site clean. Sometimes you will get a mail or message from the Webmaster Central team about issues on your site. Would you like to know what the mail looks like? Here it is ...

Here is a short video in which Matt Cutts explains what we need to do before submitting a re-inclusion request to Google.

Effective way of Doing Social Bookmarking

Social bookmarking has its own place on the internet even as social media is on the rise. It's a process in which people find links, bookmark or favorite them, and vote for them. The more votes a link gets, the more popular the bookmark is.

Most people don't even know the right way to do social bookmarking. It's an art: if you know how to perform it well, your profile will get more visits and your links will get more votes.

I would like to explain the right way to do social bookmarking. The steps are described below:

- Update your entire profile with complete info so that it looks professional

- Search for authoritative profiles, then vote and comment on their links

- Add authoritative profiles to your friends

- Build a professional relationship with them

- Be the first to vote and comment on their links

- As a result, you will get good comments and votes for your links

- Provide different kinds of good links that catch public interest

List of Social Bookmarking sites:

- Digg.com

- StumbleUpon.com

- Technorati.com

- Del.icio.us

- Propeller.com

- simpy.com

- Slashdot.org .......... etc...

The main problem here is that most people create profiles and submit their own website links again and again from the same profile. This gets the account banned by the site administrator, and it also gives you a bad reputation on the internet. I hope the instructions are clear; if not, let me know so that I can correct myself. Feel free to ask questions. Looking forward to your comments.

Monday, February 4, 2013

Are shortened URLs SEO friendly? …. Yes

Shortened URLs are used especially on social media sites to share web pages and interesting links. People spend hours and hours on Facebook, Twitter, etc., so URL shortener services are increasing day by day. Many people ask whether these short URLs are SEO friendly or not.

Shortened URLs usually redirect to destination URLs. We need to find out whether these redirects are permanent (301) or temporary (302), and there are a few free tools on the web to check this. Yes, URL shortening services use permanent 301 redirects, which are search engine friendly. Please have a look at the following screenshot and video for a better understanding.
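Besides the free web tools, the redirect type can be checked with a short script. The following is a minimal sketch using only Python's standard library; the function names are made up for this example, and no particular shortener is assumed. `http.client` does not follow redirects on its own, so the status code it returns is the one the shortener itself sends.

```python
import http.client
from urllib.parse import urlsplit


def classify_redirect(status):
    """Label an HTTP status code as a permanent or temporary redirect."""
    if status in (301, 308):
        return "permanent"
    if status in (302, 303, 307):
        return "temporary"
    return "not a redirect"


def check_short_url(short_url, timeout=10):
    """Send one HEAD request (no redirect following) and report what came back."""
    parts = urlsplit(short_url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        response = conn.getresponse()
        return (
            response.status,
            response.getheader("Location"),
            classify_redirect(response.status),
        )
    finally:
        conn.close()
```

Calling `check_short_url` with a short link of your own returns the status code, the destination from the `Location` header, and the label; a result of 301 with "permanent" is the search-engine-friendly case described above.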

Matt Cutts explains the same in the following video:

Google Webmaster Tools - SEO Updates 2012

I would like to highlight and summarize the SEO updates of 2012 that were published on the official Google Webmaster Central blog.

- Google https search is faster now on modern browser - Mar 19th 2012

- SEO Friendly Pagination algorithm update from Google - Mar 13th 2012

- Better Crawl Errors reporting in Google Webmaster tools - Mar 12th 2012

- Filtering Free hosting spam websites from search results - Mar 06th 2012

- User & Administrator access are now available in GWT - Mar 05th 2012

- Video markup language for better indexation of Videos - Feb 21st 2012

- Optimize your site to handle unexpected traffic growth - Feb 09th 2012

- Webmaster tools sitemaps interface with fresh look - Jan 26th 2012

- Few changes on Top search queries data in Webmaster tools - Jan 25th 2012

- Google Algorithm update to Page layout structure - Jan 19th 2012

- Page Titles Update in Search Results - Jan 12th 2012

1. Faster HTTPs Search:

Google made some changes to its HTTPS search. If you are logged in to your Gmail account and search for something on Google, then when you click a specific result you will reach that web page a little faster than with the earlier HTTPS search. The Google team has reduced the latency of HTTPS search in modern browsers like Chrome.

2. SEO Friendly Pagination:

Most eCommerce and content portals have pagination issues. Pagination creates many duplicate pages, with repeated product titles on eCommerce portals and repeated article titles on content portals. Google has now come up with an updated algorithm, and the following are the two ways to fix the pagination issue.

- Add a "View all" page and use rel="canonical" on the paginated sub-pages

- Use rel="prev" and rel="next"
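As an illustration of the second option, here is a small Python sketch that builds the `<link>` tags for one page of a paginated series. The function name and the `?page=N` URL pattern are assumptions made for this example; your site's pagination URLs may look different.

```python
from html import escape


def pagination_link_tags(base_url, page, total_pages):
    """Build rel="prev"/rel="next" <link> tags for one page of a series.

    Assumes page 1 lives at base_url and later pages at base_url?page=N
    (a hypothetical URL scheme chosen for this sketch).
    """
    if not 1 <= page <= total_pages:
        raise ValueError("page must be between 1 and total_pages")

    def page_url(number):
        return base_url if number == 1 else f"{base_url}?page={number}"

    tags = []
    if page > 1:  # the first page has no previous page
        href = escape(page_url(page - 1), quote=True)
        tags.append(f'<link rel="prev" href="{href}">')
    if page < total_pages:  # the last page has no next page
        href = escape(page_url(page + 1), quote=True)
        tags.append(f'<link rel="next" href="{href}">')
    return tags


for tag in pagination_link_tags("http://www.example.com/shoes", 2, 3):
    print(tag)
```

The tags go in the `<head>` of each paginated page. The first page gets only a rel="next" tag and the last page only a rel="prev" tag.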

3. Upgraded Crawl Errors Reporting:

Crawl Errors is a feature in Webmaster Tools that reports the errors on your website. The Google webmaster team has started detecting new errors and reporting all errors in a better way, categorizing crawl errors into two types: "Site Errors" and "URL Errors".

4. Filtering Free hosting spam websites:

We know that a few hosting providers offer free hosting services. Because the hosting is free, many spammers use it to generate websites with low-quality content dynamically. Google has started filtering these websites from search results and is also asking free hosting providers to report spam patterns to the Google team, so that the team can update its algorithm and filter these spam sites effectively.

5. Webmaster Tools now has both admin & user access:

Earlier, we had no option to give another user limited user access to our Webmaster Tools account; only full admin access could be shared, which isn't safe. With the latest update, we can now give other users user-level access.

6. Schema.org Video Markup:

We all know that Google, Yahoo & Bing support the schema.org common markup vocabulary to make a better web. Now schema.org has released markup for videos, i.e. http://schema.org/VideoObject. Follow this markup when adding videos to your site. It will help search engines crawl these videos effectively.
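To give a rough idea of what VideoObject markup contains, here is a Python sketch that builds it as JSON-LD. JSON-LD is one of the syntaxes schema.org data can be written in; the post does not say which syntax to use, so that choice is an assumption, and the function name and all sample values are made up for illustration. The property names are taken from the schema.org VideoObject type.

```python
import json


def video_object_jsonld(name, description, thumbnail_url, upload_date, content_url):
    """Return schema.org VideoObject markup as a JSON-LD string."""
    data = {
        "@context": "http://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,  # ISO 8601 date
        "contentUrl": content_url,
    }
    return json.dumps(data, indent=2)


# Sample values are placeholders, not real assets.
markup = video_object_jsonld(
    name="Sample video",
    description="A short sample description of the video.",
    thumbnail_url="http://www.example.com/thumb.jpg",
    upload_date="2012-02-21",
    content_url="http://www.example.com/video.mp4",
)
print('<script type="application/ld+json">')
print(markup)
print("</script>")
```

The printed `<script>` block would be placed in the HTML of the page that embeds the video.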

7. Handling Unexpected Traffic Growth:

Popular sites often face downtime when traffic increases suddenly, and websites frequently crash in such cases. So it’s better to plan a “lightweight version of the website or mobile version” to handle traffic in difficult times. We can also use third-party services to host the particular page that is receiving the higher traffic.

8. Sitemaps Interface with a Fresh Look:

Earlier, the sitemaps section had a plain interface with some basic content stats. Now video and image sitemap statistics have been added, with a colorful interface. Compared to the old interface, the new one looks clean and is easier to understand, with better usability. Users can now also delete sitemaps that were submitted by another owner of the site.

9. Changes to Top Search Queries Data:

Normally, the search queries data in Webmaster Tools showed the average position of a keyword. From now on it will display the top position of that keyword.

10. Page Layout Algorithm Update:

We often see websites with so many ads that it’s difficult to tell the real content from the ads. Some sites show nothing but ads above the fold, and users have a bad experience with them. Under the new page layout algorithm, these sites will lose rankings and drop from the top search engine results.

11. Page Titles Update in Search Results:

Page titles have a strong impact on CTR. Usually Google picks the title from the <title> tag and displays it in search results, and it displays a description based on the search query by collecting information from the page or the meta description tag. Now the title will also be displayed based on the visitor's search query, which helps to improve the click-through rate on search results.
